| December 27, 2016 | Volume 12 Issue 48 |

Electrical/Electronic News & Products

Designfax weekly eMagazine

Intro to reed switches, magnets, magnetic fields

This brief introductory video on the DigiKey site offers tips for engineers designing with reed switches. Stephen Day, Ph.D., from Coto Technology gives a solid overview of reed switches -- complete with real-world application examples -- and a detailed explanation of how they react to magnetic fields.

View the video.

Bi-color LEDs to light up your designs

Created with engineers and OEMs in mind, SpectraBright Series SMD RGB and Bi-Color LEDs from Visual Communications Company (VCC) deliver efficiency, design flexibility, and control for devices in a range of industries, including mil-aero, automated guided vehicles, EV charging stations, industrial, telecom, IoT/smart home, and medical. These 50,000-hr bi-color and RGB options save money and space on the HMI, communicating two or three operating modes in a single component.

Learn more.

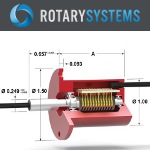

All about slip rings: How they work and their uses

Rotary Systems has put together a really nice basic primer on slip rings -- electrical collectors that carry a current from a stationary wire into a rotating device. Common uses are for power, proximity switches, strain gauges, video, and Ethernet signal transmission. This introduction also covers how to specify slip rings, assembly types, and interface requirements. Rotary Systems also manufactures rotary unions for fluid applications.

Read the overview.

Seifert thermoelectric coolers from AutomationDirect

AutomationDirect has added new high-quality and efficient stainless steel Seifert 340 BTU/H thermoelectric coolers with 120-V and 230-V power options. Thermoelectric coolers from Seifert use the Peltier Effect to create a temperature difference between the internal and ambient heat sinks, making internal air cooler while dissipating heat into the external environment. Fans assist the convective heat transfer from the heat sinks, which are optimized for maximum flow.

Learn more.

EMI shielding honeycomb air vent panel design

Learn from the engineering experts at Parker how honeycomb air vent panels are used to help cool electronics with airflow while maintaining electromagnetic interference (EMI) shielding. Topics include design features, cell size and thickness, platings and coatings, and a stacked design called OMNI CELL construction. These vents can be incorporated into enclosures where EMI radiation and susceptibility are a concern or where heat dissipation is necessary. Lots of good info.

Read the Parker blog.

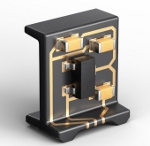

What is 3D-MID? Molded parts with integrated electronics from HARTING

3D-MID (three-dimensional mechatronic integrated devices) technology combines electronic and mechanical functionalities into a single, 3D component. It replaces the traditional printed circuit board and opens up many new opportunities. It takes injection-molded parts and uses laser-direct structuring to etch areas of conductor structures, which are filled with a copper plating process to create very precise electronic circuits. HARTING, the technology's developer, says it's "Like a PCB, but 3D." Tons of possibilities.

View the video.

Loss-free conversion of 3D/CAD data

CT CoreTechnologie has further developed its state-of-the-art CAD converter 3D_Evolution and is now introducing native interfaces for reading Solidedge and writing Nx and Solidworks files. It supports a wide range of formats such as Catia, Nx, Creo, Solidworks, Solidedge, Inventor, Step, and Jt, facilitating smooth interoperability between different systems and collaboration for engineers and designers in development environments with different CAD systems.

Learn more.

Top 5 reasons for solder joint failure

Solder joint reliability is often a pain point in the design of an electronic system. According to Tyler Ferris at ANSYS, a wide variety of factors affect joint reliability, and any one of them can drastically reduce joint lifetime. Properly identifying and mitigating potential causes during the design and manufacturing process can prevent costly and difficult-to-solve problems later in a product lifecycle.

Read this informative ANSYS blog.

Advanced overtemp detection for EV battery packs

Littelfuse has introduced TTape, a ground-breaking over-temperature detection platform designed to transform the management of Li-ion battery systems. TTape helps vehicle systems monitor and manage premature cell aging effectively while reducing the risks associated with thermal runaway incidents. This solution is ideally suited for a wide range of applications, including automotive EV/HEVs, commercial vehicles, and energy storage systems.

Learn more.

Benchtop ionizer for hands-free static elimination

EXAIR's Varistat Benchtop Ionizer is the latest solution for neutralizing static on charged surfaces in industrial settings. Using ionizing technology, the Varistat provides a hands-free solution that requires no compressed air. Easily mounted on benchtops or machines, it is manually adjustable and perfect for processes needing comprehensive coverage such as part assembly, web cleaning, printing, and more.

Learn more.

LED light bars from AutomationDirect

AutomationDirect adds CCEA TRACK-ALPHA-PRO series LED light bars to expand their offering of industrial LED fixtures. Their rugged industrial-grade anodized aluminum construction makes TRACK-ALPHA-PRO ideal for use with medium to large-size industrial machine tools and for use in wet environments. These 120 VAC-rated, high-power LED lights provide intense, uniform lighting, with up to a 4,600-lumen output (100 lumens per watt). They come with a standard bracket mount that allows for angle adjustments. Optional TACLIP mounts (sold separately) provide for extra sturdy, vibration-resistant installations.

Learn more.

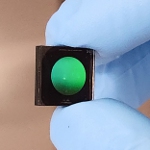

World's first metalens fisheye camera

2Pi Optics has begun commercialization of the first fisheye camera based on the company's proprietary metalens technology -- a breakthrough for electronics design engineers and product managers striving to miniaturize the tiny digital cameras used in advanced driver-assistance systems (ADAS), AR/VR, UAVs, robotics, and other industrial applications. This camera can operate at different wavelengths -- from visible, to near IR, to longer IR -- and is claimed to "outperform conventional refractive, wide-FOV optics in all areas: size, weight, performance, and cost."

Learn more.

Orbex offers two fiber optic rotary joint solutions

Orbex Group announces its 700 Series of fiber optic rotary joint (FORJ) assemblies, supporting either single or multi-mode operation ideal for high-speed digital transmission over long distances. Wavelengths available are 1,310 or 1,550 nm. Applications include marine cable reels, wind turbines, robotics, and high-def video transmission. Both options feature an outer diameter of 7 mm for installation in tight spaces. Construction includes a stainless steel housing.

Learn more.

Mini tunnel magneto-resistance effect sensors

Littelfuse has released its highly anticipated 54100 and 54140 mini Tunnel Magneto-Resistance (TMR) effect sensors, offering unmatched sensitivity and power efficiency. The key differentiator is their remarkable sensitivity and 100x improvement in power efficiency compared to Hall Effect sensors. They are well suited for applications in position and limit sensing, RPM measurement, brushless DC motor commutation, and more in various markets including appliances, home and building automation, and the industrial sectors.

Learn more.

Panasonic solar and EV components available from Newark

Newark has added Panasonic Industry's solar inverters and EV charging system components to their power portfolio. These best-in-class products help designers meet the growing global demand for sustainable and renewable energy mobility systems. Offerings include film capacitors, power inductors, anti-surge thick film chip resistors, graphite thermal interface materials, power relays, capacitors, and wireless modules.

Learn more.

Machine vision takes a leap forward: Computer learns to recognize sounds by watching video

MIT researchers' neural network was fed video from 26 TB of video data downloaded from the photo-sharing site Flickr. Researchers found the network can interpret natural sounds in terms of image categories. For example, the sound of birdsong tends to be associated with forest scenes and pictures of trees, birds, birdhouses, and bird feeders. [Image: Jose-Luis Olivares/MIT]

By Larry Hardesty, MIT

In recent years, computers have gotten remarkably good at recognizing speech and images: Think of the dictation software on most cellphones, or the algorithms that automatically identify people in photos posted to Facebook.

But recognition of natural sounds -- such as crowds cheering or waves crashing -- has lagged behind. That's because most automated recognition systems, whether they process audio or visual information, are the result of machine learning, in which computers search for patterns in huge compendia of training data. Usually, the training data has to be first annotated by hand, which is prohibitively expensive for all but the highest-demand applications.

Sound recognition may be catching up, however, thanks to researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL). At the Neural Information Processing Systems conference held earlier this month, they presented a sound-recognition system that outperforms its predecessors but didn't require hand-annotated data during training.

Instead, the researchers trained the system on video. First, existing computer vision systems that recognize scenes and objects categorized the images in the video. The new system then found correlations between those visual categories and natural sounds.

"Computer vision has gotten so good that we can transfer it to other domains," says Carl Vondrick, an MIT graduate student in electrical engineering and computer science and one of the paper's two first authors. "We're capitalizing on the natural synchronization between vision and sound. We scale up with tons of unlabeled video to learn to understand sound."

The researchers tested their system on two standard databases of annotated sound recordings, and it was between 13 and 15 percent more accurate than the best-performing previous system. On a data set with 10 different sound categories, it could categorize sounds with 92 percent accuracy, and on a data set with 50 categories it performed with 74 percent accuracy. On those same data sets, humans are 96 percent and 81 percent accurate, respectively.

"Even humans are ambiguous," says Yusuf Aytar, the paper's other first author and a postdoc in the lab of MIT professor of electrical engineering and computer science Antonio Torralba. Torralba is the final co-author on the paper.

"We did an experiment with Carl [Vondrick]," Aytar says. "Carl was looking at the computer monitor, and I couldn't see it. He would play a recording and I would try to guess what it was. It turns out this is really, really hard. I could tell indoor from outdoor, basic guesses, but when it comes to the details -- 'Is it a restaurant?' -- those details are missing. Even for annotation purposes, the task is really hard."

Complementary modalities

Because it takes far less power to collect and process audio data than it does to collect and process visual data, the researchers envision that a sound-recognition system could be used to improve the context sensitivity of mobile devices.

When coupled with GPS data, for instance, a sound-recognition system could determine that a cellphone user is in a movie theater and that the movie has started, and the phone could automatically route calls to a prerecorded outgoing message. Similarly, sound recognition could improve the situational awareness of autonomous robots.

"For instance, think of a self-driving car," Aytar says. "There's an ambulance coming, and the car doesn't see it. If it hears it, it can make future predictions for the ambulance -- which path it's going to take -- just purely based on sound."

Visual language

The researchers' machine-learning system is a neural network, so called because its architecture loosely resembles that of the human brain. A neural net consists of processing nodes that, like individual neurons, can perform only rudimentary computations but are densely interconnected. Information -- say, the pixel values of a digital image -- is fed to the bottom layer of nodes, which processes it and feeds it to the next layer, which processes it and feeds it to the next layer, and so on. The training process continually modifies the settings of the individual nodes, until the output of the final layer reliably performs some classification of the data -- say, identifying the objects in the image.
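The layer-by-layer flow described above can be sketched in a few lines of Python. This is only an illustration under assumed settings, not the researchers' network: the layer sizes, the random weights, the ReLU and softmax functions, and the four "pixel" values are all hypothetical choices made to keep the example small.

```python
import math
import random


def relu(values):
    """Simple node computation: pass positive values, zero out the rest."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn the final layer's raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Each output node computes a weighted sum of every input plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def make_layer(n_in, n_out, rng):
    """Hypothetical untrained layer: random weights, zero biases."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(pixels, layers):
    """Feed data to the bottom layer and pass each result to the next layer."""
    activations = pixels
    for index, (weights, biases) in enumerate(layers):
        raw = dense(activations, weights, biases)
        is_last = index == len(layers) - 1
        activations = softmax(raw) if is_last else relu(raw)
    return activations


rng = random.Random(0)
layers = [make_layer(4, 5, rng), make_layer(5, 3, rng)]
scores = forward([0.1, 0.9, 0.4, 0.0], layers)  # one score per assumed category
```

Training would adjust the weights and biases until `scores` reliably favors the right category; here they are left random, so only the data flow is meaningful.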

Vondrick, Aytar, and Torralba first trained a neural net on two large, annotated sets of images: one, the ImageNet data set, contains labeled examples of images of 1,000 different objects; the other, the Places data set created by Oliva's group and Torralba's group, contains labeled images of 401 different scene types, such as a playground, bedroom, or conference room.

Once the network was trained, the researchers fed it the video from 26 terabytes of video data downloaded from the photo-sharing site Flickr. "It's about 2 million unique videos," Vondrick says. "If you were to watch all of them back to back, it would take you about two years." Then they trained a second neural network on the audio from the same videos. The second network's goal was to correctly predict the object and scene tags produced by the first network.
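As a rough sanity check on those figures, the quoted totals imply an average clip of about half a minute and roughly 13 MB. The short calculation below assumes 365-day years, decimal terabytes, and that "about 2 million" and "about two years" are taken as exact, so the results are ballpark values only.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a 365-day year

num_videos = 2_000_000                 # "about 2 million unique videos"
total_seconds = 2 * SECONDS_PER_YEAR   # "about two years" of back-to-back viewing
total_bytes = 26 * 10**12              # 26 TB, assuming decimal terabytes

avg_clip_seconds = total_seconds / num_videos
avg_clip_megabytes = total_bytes / num_videos / 10**6

print(f"average clip length: {avg_clip_seconds:.1f} s")
print(f"average clip size:   {avg_clip_megabytes:.1f} MB")
```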

The result was a network that could interpret natural sounds in terms of image categories. For instance, it might determine that the sound of birdsong tends to be associated with forest scenes and pictures of trees, birds, birdhouses, and bird feeders.
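The two-network arrangement can be illustrated with a toy "teacher and student" setup. The sketch below is an assumption-laden stand-in, not the MIT system: the student is a single linear layer rather than a deep network, the two-number "audio features" and the two scene categories are invented, and the Kullback-Leibler divergence is used here simply as one common way to measure how far the student's predictions are from the teacher's tags.

```python
import math


def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(teacher, student):
    """How far the student's distribution is from the teacher's (0 = identical)."""
    return sum(t * math.log(t / s) for t, s in zip(teacher, student) if t > 0)


def student_predict(weights, features):
    logits = [sum(w * x for w, x in zip(row, features)) for row in weights]
    return softmax(logits)


def train_student(examples, n_classes, n_features, lr=0.5, epochs=200):
    """Gradient descent that nudges the student toward the teacher's outputs."""
    weights = [[0.0] * n_features for _ in range(n_classes)]
    for _ in range(epochs):
        for features, teacher in examples:
            student = student_predict(weights, features)
            for k in range(n_classes):
                error = student[k] - teacher[k]  # gradient w.r.t. logit k
                for j in range(n_features):
                    weights[k][j] -= lr * error * features[j]
    return weights


# Hypothetical data: (audio features, vision network's scores for [forest, street]).
examples = [
    ([1.0, 0.0], [0.9, 0.1]),  # birdsong-like clip; the vision network saw a forest
    ([0.0, 1.0], [0.2, 0.8]),  # traffic-like clip; the vision network saw a street
]

untrained = [[0.0, 0.0], [0.0, 0.0]]
kl_before = kl_divergence(examples[0][1], student_predict(untrained, examples[0][0]))

weights = train_student(examples, n_classes=2, n_features=2)
prediction = student_predict(weights, examples[0][0])
kl_after = kl_divergence(examples[0][1], prediction)
```

No human-written sound labels appear anywhere in this loop: the only supervision is the first network's output, which is the point of the approach.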

Benchmarking

To compare the sound-recognition network's performance to that of its predecessors, however, the researchers needed a way to translate its language of images into the familiar language of sound names. So they trained a simple machine-learning system to associate the outputs of the sound-recognition network with a set of standard sound labels.

For that, the researchers did use a database of annotated audio -- one with 50 categories of sound and about 2,000 examples. Those annotations had been supplied by humans. But it's much easier to label 2,000 examples than to label 2 million. And the MIT researchers' network, trained first on unlabeled video, significantly outperformed all previous networks trained solely on the 2,000 labeled examples.
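The article does not say which "simple machine-learning system" was used for this last step, so the sketch below substitutes a nearest-centroid classifier purely for illustration. The three-number output vectors and the "birdsong" and "rain" labels are hypothetical; the idea shown is only that a small labeled set is enough to map the pretrained network's outputs onto sound names.

```python
from collections import defaultdict


def fit_centroids(labeled_outputs):
    """Average the network outputs seen for each human-supplied sound label."""
    grouped = defaultdict(list)
    for vector, label in labeled_outputs:
        grouped[label].append(vector)
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(centroids, vector):
    """Pick the label whose average output is closest to this clip's output."""
    def squared_distance(center):
        return sum((a - b) ** 2 for a, b in zip(vector, center))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical outputs of the sound network for a handful of labeled clips.
labeled_outputs = [
    ([0.9, 0.1, 0.0], "birdsong"),
    ([0.8, 0.2, 0.0], "birdsong"),
    ([0.1, 0.1, 0.8], "rain"),
    ([0.0, 0.2, 0.8], "rain"),
]

centroids = fit_centroids(labeled_outputs)
prediction = classify(centroids, [0.7, 0.2, 0.1])  # output for a new, unlabeled clip
```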

"With the modern machine-learning approaches, like deep learning, you have many, many trainable parameters in many layers in your neural-network system," says Mark Plumbley, a professor of signal processing at the University of Surrey. "That normally means that you have to have many, many examples to train that on. And we have seen that sometimes there's not enough data to be able to use a deep-learning system without some other help. Here the advantage is that they are using large amounts of other video information to train the network and then doing an additional step where they specialize the network for this particular task. That approach is very promising because it leverages this existing information from another field."

Plumbley says that both he and colleagues at other institutions have been involved in efforts to commercialize sound recognition software for applications such as home security, where it might, for instance, respond to the sound of breaking glass. Other uses might include eldercare, to identify potentially alarming deviations from ordinary sound patterns, or to control sound pollution in urban areas. "I really think that there's a lot of potential in the sound-recognition area," he says.

Published December 2016
